Editor’s Note: This blogpost is a repost of the original content published on 7 June 2023, by Luffistotle from Zee Prime Capital. Zee Prime Capital is a VC firm investing in programmable assets and early-stage founders globally. They call themselves a totally supercool and chilled VC (we tend to agree) investing in programmable assets, collaborative intelligence and other buzzwords. Luffistotle is an Investor at Zee Prime Capital. This blogpost represents the independent view of the author, who has given permission for this re-publication.

Table of Contents

- History of P2P

- Decentralized Storage Network Landscape

- FVM

- Permanent Storage

- Web 3’s First Commercial Market

- Consequences of Composability

Storage is a critical part of any computing stack. Without this fundamental element, nothing is possible. Through the continued advancement of computational resources, a great deal of excess and underutilized storage has been created. Distributed Storage Networks (DSNs) offer a way to coordinate and utilize these latent resources and turn them into productive assets. These networks have the potential to bring the first real commerce vertical into Web 3 ecosystems.

History of P2P

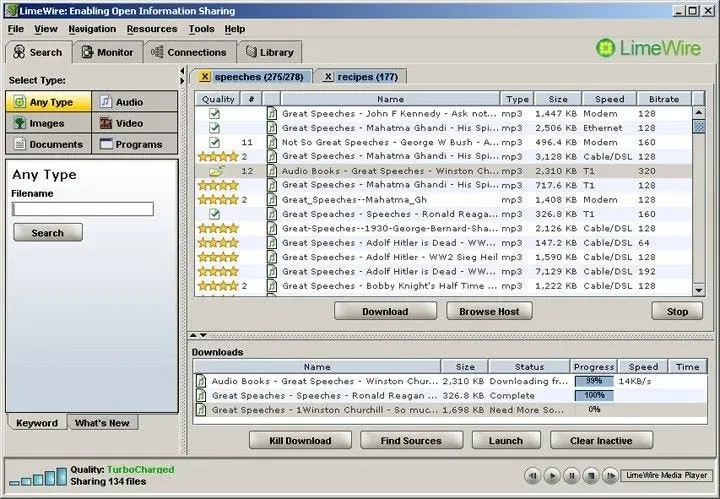

Peer-to-peer file sharing first hit the mainstream with the advent of Napster. While there were earlier methods of sharing files on the internet, the mainstream finally joined in with the MP3 sharing that Napster brought. From this starting point, the distributed systems world exploded with activity. The centralization within Napster’s model (for indexing) made it easy to shut down given its legal transgressions; however, it laid the foundation for more robust methods of file sharing.

The Gnutella protocol followed this trailblazing and had many different effective front-ends leveraging the network in different ways. As a more decentralized version of the Napster-esque query network, it was much more robust to censorship. Even so, it experienced censorship in its day: AOL had acquired the developing company, Nullsoft, and quickly realized the potential, shutting distribution down almost immediately. However, it had already made it outside and was quickly reverse-engineered. BearShare, LimeWire, and FrostWire are likely the most notable of these front-end applications you may have encountered. Where it ultimately failed was the bandwidth requirements (a deeply limited resource at the time) combined with the lack of liveness and content guarantees.

Remember this? If not, do not worry: it has been reborn as an NFT marketplace…

What came next was BitTorrent. This presented a level-up due to the two-sided nature of the protocol and its ability to maintain Distributed Hash Tables (DHTs). DHTs are important because they serve as a decentralized version of a ledger that stores the locations of files and is available for lookup by other participating nodes in the network.
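
As a rough illustration of the lookup idea, here is a minimal sketch of a DHT in Python. It borrows the XOR distance metric from Kademlia (the design BitTorrent’s Mainline DHT is based on), but everything else is simplified: `ToyDHT`, `announce`, and `lookup` are hypothetical names, and the toy can see every node at once, whereas a real DHT routes each query hop by hop through partial routing tables.

```python
import hashlib


def key_for(name: str) -> int:
    """Map a node name or file name into one shared 160-bit key space."""
    return int.from_bytes(hashlib.sha1(name.encode()).digest(), "big")


class ToyDHT:
    """Toy model: each node keeps only the records whose key is closest to its id."""

    def __init__(self, node_names):
        # node id -> that node's local table of {file key: set of peer addresses}
        self.nodes = {key_for(name): {} for name in node_names}

    def _closest_node(self, key: int) -> int:
        # Kademlia-style distance: XOR of the two ids, compared as integers.
        return min(self.nodes, key=lambda node_id: node_id ^ key)

    def announce(self, file_name: str, peer_addr: str) -> None:
        """Record that `peer_addr` holds `file_name` on the responsible node."""
        key = key_for(file_name)
        self.nodes[self._closest_node(key)].setdefault(key, set()).add(peer_addr)

    def lookup(self, file_name: str) -> set:
        """Ask the responsible node which peers hold `file_name`."""
        key = key_for(file_name)
        return set(self.nodes[self._closest_node(key)].get(key, set()))


dht = ToyDHT(["node-a", "node-b", "node-c", "node-d"])
dht.announce("song.mp3", "10.0.0.1:6881")
dht.announce("song.mp3", "10.0.0.2:6881")
print(dht.lookup("song.mp3"))
```

No single node holds the whole index: each one is responsible only for the slice of the key space nearest to its own id, which is what removes the central indexing server that made Napster easy to shut down.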

After the advent of Bitcoin and blockchains, people started thinking big about how this novel coordination mechanism could be used to tie together networks of latent unused resources and commodities. What followed soon after was the development of DSNs.

Something that would perhaps surprise many people is that the history of tokens and P2P networks goes back much further than the existence of Bitcoin and blockchains. The pioneers of these networks very quickly realized the following points:

- Monetizing a useful protocol you have built is difficult as a result of forking. Even if you monetize a front end and serve ads or utilize other forms of monetization, a fork will likely undercut you.

- Not all usage is created equal. In the case of Gnutella, 70% of users did not share files and 50% of requests were for files hosted by the top 1% of hosts.

Power laws.

How does one remedy these problems? For BitTorrent it is seeding ratios (upload/download ratio); for others, it was the introduction of primitive token systems. Most often called credits or points, they were allocated to incentivize good behavior (that promotes the health of the protocol) and stewardship of the network (like regulating content in the form of trust ratings). For a deeper dive into the broader history of all of this, I highly recommend the (now deleted, available via web archive) articles by John Backus.

Interestingly, a DSN was part of the original vision for Ethereum. The “holy trinity”, as it was called, was meant to provide the necessary suite of tools for the world computer to flourish. Legend has it that Swarm as the storage layer for Ethereum, with Whisper as the messaging layer, was Gavin Wood’s idea.

Mainstream DSNs followed and the rest is history.

Decentralized Storage Network Landscape

The decentralized storage landscape is most interesting because of the huge disparity between the size of the leader (Filecoin) and the other, more nascent storage networks. While many people think of the storage landscape as two giants, Filecoin and Arweave, it would likely surprise most people that Arweave is the 4th largest by usage, behind Storj and Sia (although Sia seems to be declining in usage). And while we can readily question how legitimate the FIL data stored is, even if we handicapped it by, say, 90%, FIL usage would still be ~400x that of Arweave.

What can we infer from this?

There is clear dominance in the market right now, but the continuity of this is dependent on the usefulness of these storage resources. The DSNs all use roughly the same architecture: node operators have a bunch of unused storage assets (hard drives), which they can pledge to the network to mine blocks and earn miner rewards for storing data. While the approaches to pricing and permanence may differ, the most important factor will be how easy and affordable retrieval and computation of the stored data is.

Fig 1. Storage Networks by Capacity and Usage

N.B:

- Arweave capacity is not directly measurable; instead, node operators are always incentivized to have sufficient buffer and to increase supply to meet demand. How big is the buffer? Given that it cannot be measured, we cannot know.

- Swarm’s actual network usage is impossible to tell; we can only look at how much storage has been paid for already. Whether it is used is unknown.

While this is the table of live projects, there are other DSNs in the works. These include ETH Storage, Maidsafe, and others.

FVM

Before going further, it is worth noting that Filecoin has recently launched the Filecoin Ethereum Virtual Machine (FEVM). The underlying Filecoin Virtual Machine (FVM) is a WASM VM that can support many other runtimes on top via a hypervisor. For instance, the recently launched FEVM is an Ethereum Virtual Machine runtime on top of the FVM/FIL network. This is worth highlighting because it facilitates an explosion of activity concerning smart contracts (i.e. stuff) on top of FIL. Before the March launch, there were 11 active smart contracts on FIL; following the FVM launch, this has exploded. It benefits from composability by leveraging all the work done in Solidity to build out new businesses on top of FIL. This means innovations like quasi-liquid staking type primitives from teams like GLIF, and the various additional financialization of these markets you can build on top of such a platform. We believe this will accelerate storage providers because of the increases in capital efficiency (SPs need FIL to actively mine/seal storage deals). This differs from typical LSDs as there is an element of assessing the credit risk of the individual storage providers.

Permanent Storage

I believe Arweave gets the most airtime on this front; it has a flashy tagline that appeals to the deepest desires of Web 3 participants:

Permanent Storage.

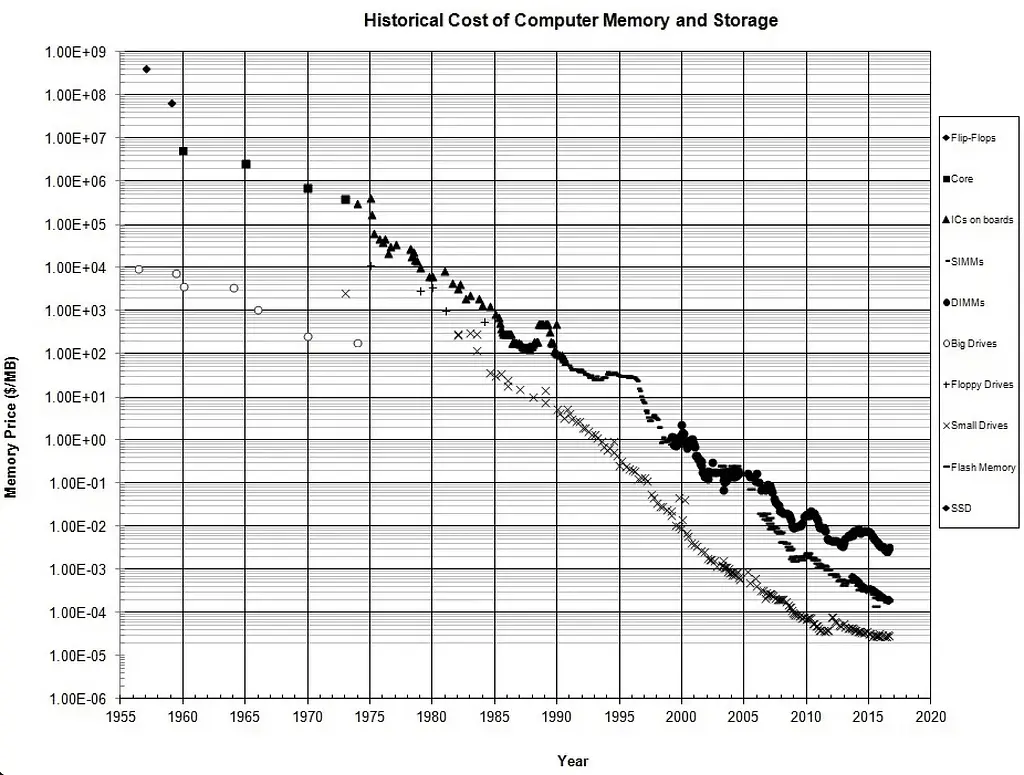

But what does this mean? It is an extremely desirable property, but in reality, execution is everything. Ultimately, execution comes down to sustainability and cost for the end users. Arweave’s model is pay once, store forever (200 years paid upfront, plus an assumption that the cost of storage keeps deflating). This kind of pricing model works well in a deflationary pricing environment for the underlying asset, as there is a constant goodwill accrual (i.e. old deals subsidize new deals); however, the inverse is true in inflationary environments. History tells us this shouldn’t be an issue, as the cost of computer storage has more or less been down only since inception, but hard drive cost alone is not the whole picture.
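
To see why the deflation assumption carries so much weight, here is a small sketch of the arithmetic. It assumes that the yearly cost of storing a fixed amount of data changes by a constant fraction each year, so the total bill is a geometric series. This is a simplification for illustration and not Arweave’s actual pricing formula; the function names and rates are made up.

```python
def cost_over_horizon(first_year_cost, annual_decline, years):
    """Total cost of `years` of storage when the yearly cost falls by
    `annual_decline` each year (a negative value models rising costs)."""
    return sum(first_year_cost * (1 - annual_decline) ** t for t in range(years))


def cost_forever(first_year_cost, annual_decline):
    """Limit of the series above as the horizon grows without bound."""
    if not 0 < annual_decline < 1:
        raise ValueError("a finite perpetual cost needs 0 < annual_decline < 1")
    return first_year_cost / annual_decline


# Illustrative rates only: compare a 200-year bill under different assumptions.
for decline in (0.30, 0.10, 0.0, -0.02):
    total = cost_over_horizon(1.0, decline, 200)
    print(f"annual decline {decline:+.2f}: 200-year cost = {total:.2f}x year one")
```

With a steady 30% yearly decline, 200 years of storage costs only about 3.3 times the first year, which is what makes a one-off payment plausible. With no decline at all it costs 200 times the first year, and with rising costs the sum grows far beyond that.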

Arweave creates permanent storage via the Succinct Proofs of Random Access (SPoRA) algorithm, which incentivizes miners to store all the data and prove they can produce a randomly selected historical block. Doing so gives them a higher probability of being selected to create the next block (and earn the corresponding rewards).
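
The incentive can be sketched with a toy model. The code below is not Arweave’s actual algorithm: the use of SHA-256, the names `recall_index` and `usable_attempts`, and the block counts are all assumptions chosen to show the general shape, in which each mining attempt is tied to a pseudo-randomly chosen historical block and only counts if the miner holds that block.

```python
import hashlib


def recall_index(prev_block_hash: bytes, nonce: int, weave_size: int) -> int:
    """Derive a pseudo-random index of a historical block from the chain tip."""
    digest = hashlib.sha256(prev_block_hash + nonce.to_bytes(8, "big")).digest()
    return int.from_bytes(digest, "big") % weave_size


def usable_attempts(stored_blocks, prev_block_hash, weave_size, attempts=1000):
    """Fraction of mining attempts in which the miner holds the challenged block."""
    hits = sum(
        recall_index(prev_block_hash, nonce, weave_size) in stored_blocks
        for nonce in range(attempts)
    )
    return hits / attempts


tip = b"hypothetical-previous-block-hash"
full_replica = set(range(100))    # miner storing every historical block
half_replica = set(range(50))     # miner storing only half of them
print("full:", usable_attempts(full_replica, tip, weave_size=100))
print("half:", usable_attempts(half_replica, tip, weave_size=100))
```

In this toy, a miner holding the full history can use every attempt, while a miner holding half of it wastes roughly half of its attempts, so storing more data translates directly into a better chance of winning the next block.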

While this model does a good job of getting node runners to want to store all of the data, it does not mean it is guaranteed to happen. Even if you set super high redundancy and use conservative heuristics to decide the parameters of the model, you can never get rid of this underlying risk of loss.
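
A back-of-the-envelope sketch makes the point concrete. It assumes, purely for illustration, that each replica is lost independently with the same probability over some period; real failures are correlated, which only makes matters worse. The function name and the 1% figure are hypothetical.

```python
def loss_probability(p_single: float, replicas: int) -> float:
    """Chance that every replica is lost, assuming independent failures."""
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be a probability")
    if replicas < 1:
        raise ValueError("need at least one replica")
    return p_single ** replicas


# Hypothetical 1% chance of losing any single replica in a given period.
for n in (1, 2, 5, 10):
    print(n, "replicas ->", loss_probability(0.01, n))
```

Each extra replica shrinks the risk by another factor of `p_single`, but for any non-zero `p_single` the result never reaches zero.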

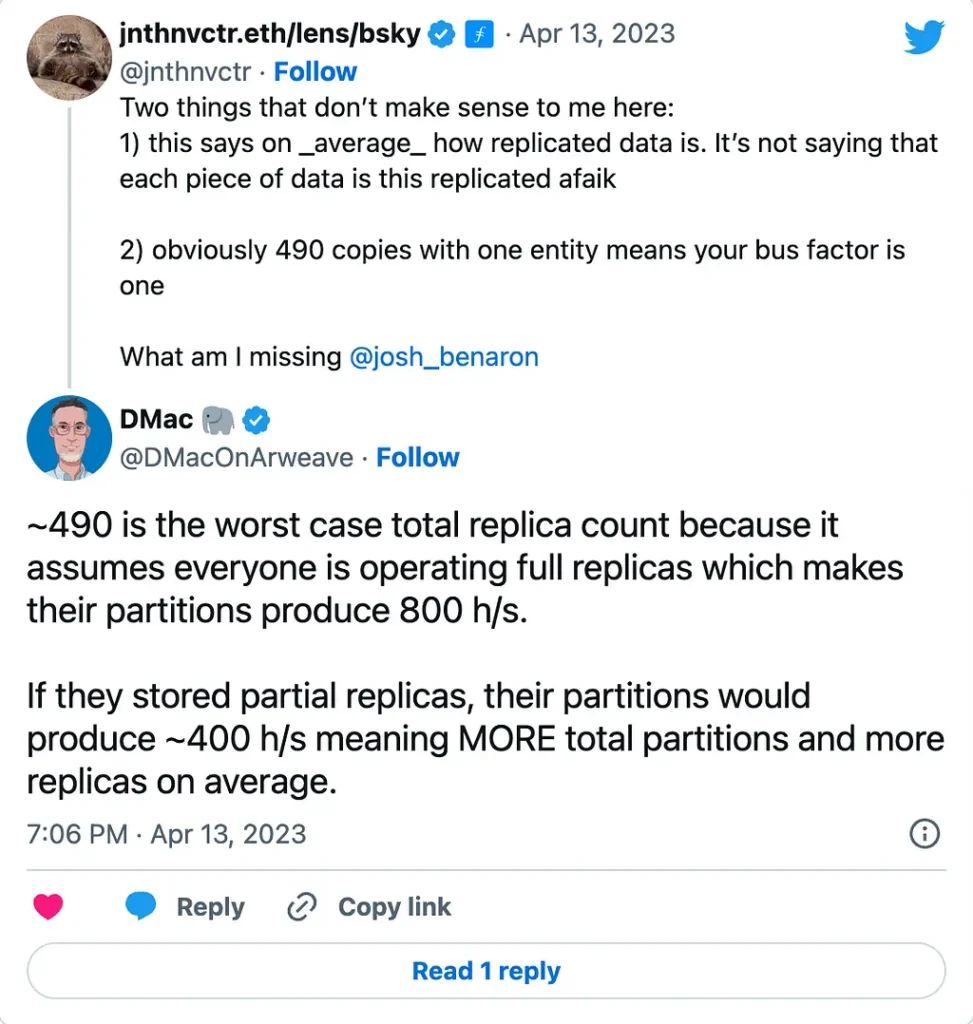

Source: Twitter

Fundamentally, the only way to truly execute permanent storage would be to deterministically force somebody (everybody?) to store the data and throw them in the gulag when they screw up. How do you properly incentivize personal responsibility such that you can achieve this? There is nothing wrong with the heuristic approach, but we need to identify the optimal way to achieve and price permanent storage.

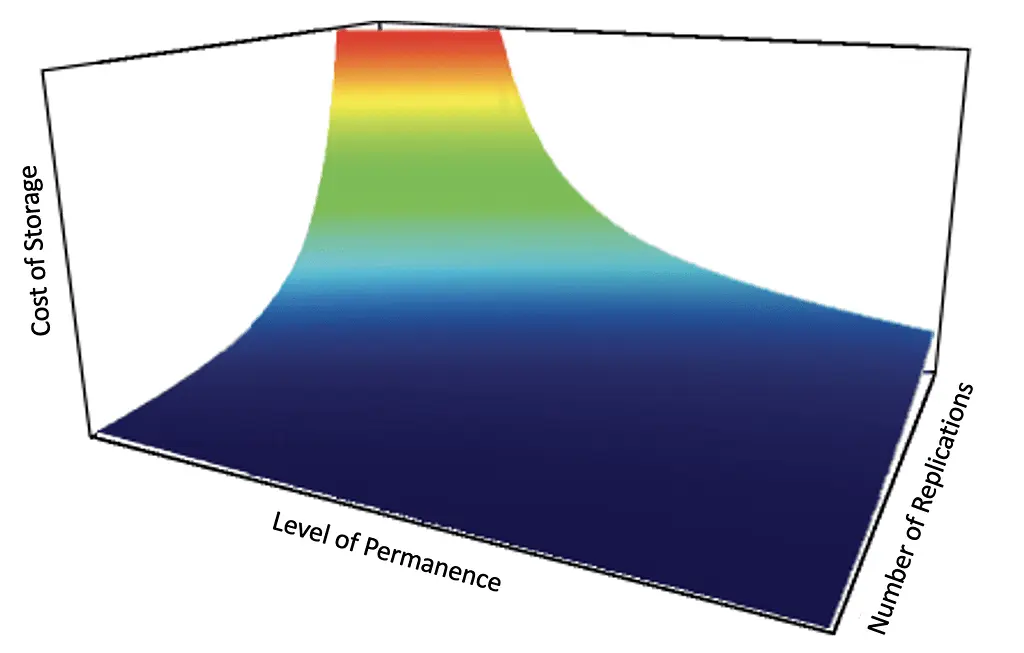

All of this is a long-winded way of getting to the point of asking what level of security we deem acceptable for permanent storage, and then we can think about that pricing over a given time frame. In reality, consumer preferences will fall along the spectrum of replication (permanence), and thus they should be able to decide what this level is and receive the corresponding pricing.

In traditional investing literature and research, it is well known how diversification affects the overall risk of a portfolio. While adding stocks initially reduces the risk of your portfolio, the benefit of each additional stock very quickly becomes negligible.

I believe the pricing of storage over and above some default standard of replication on the DSN should follow a similar curve, but with the cost and security of the storage plotted against an increasing amount of replication.
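
The diversification curve behind this analogy can be made concrete with the textbook formula for an equally weighted portfolio of stocks that all share the same volatility and the same pairwise correlation. The 30% volatility and 0.2 correlation below are illustrative assumptions, and whether storage replication follows the same shape is the hypothesis here, not an established result.

```python
import math


def portfolio_volatility(n_stocks: int, sigma: float = 0.30, rho: float = 0.20) -> float:
    """Volatility of an equally weighted portfolio of n identical stocks.

    variance = sigma^2 * (1/n + (1 - 1/n) * rho)
    """
    if n_stocks < 1:
        raise ValueError("need at least one stock")
    variance = sigma ** 2 * (1 / n_stocks + (1 - 1 / n_stocks) * rho)
    return math.sqrt(variance)


for n in (1, 2, 5, 10, 20, 50):
    print(f"{n:>2} stocks -> volatility {portfolio_volatility(n):.4f}")

# The curve flattens toward a floor that no amount of diversification removes.
print("floor:", round(0.30 * math.sqrt(0.20), 4))
```

Most of the risk reduction arrives with the first handful of holdings, after which the curve is nearly flat. If replication behaves similarly, most of the added security comes from the first few extra copies.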

For the future, I am most excited about what more DSNs with easily accessible smart contracting can bring to the market for permanent storage. I think overall consumers will benefit the most from this as the market opens up this spectrum of permanence.

For instance, in the chart above we can think of the area in green as the area of experimentation. It may be possible to achieve exponential decreases in the cost of that storage with minimal changes to the number of replications and level of permanence.

Additional ways of constructing permanence could come from replication across different storage networks rather than just within a single network. These kinds of routes are more ambitious but naturally lead to more differentiated levels of permanence. The biggest question here is whether there is some kind of “permanence-free lunch” we could achieve by spreading data across DSNs in the same way we diversify risk across a portfolio of publicly traded equities.

The answer could be yes, but it depends on node provider overlap and other complex factors. It could also be constructed via forms of insurance, possibly by node runners subjecting themselves to stricter slashing conditions in exchange for these assurances. Maintaining such a system would also be extremely complex, as it requires multiple codebases and coordination between them. Nonetheless, we look forward to this design space expanding significantly and advancing the general idea of permanent storage for our industry.
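
Why provider overlap matters can be shown with a deliberately crude model. It is an assumption made for illustration, not a measured property of any network: with weight `overlap` the two networks fail together (as if run by the same operators), and otherwise they fail independently.

```python
def joint_loss_probability(p_a: float, p_b: float, overlap: float) -> float:
    """Chance of losing the data on both networks under a toy mixture model.

    overlap = 0 -> fully independent networks (probabilities multiply)
    overlap = 1 -> fully shared operators (limited by the safer network)
    """
    for value in (p_a, p_b, overlap):
        if not 0.0 <= value <= 1.0:
            raise ValueError("all inputs must lie in [0, 1]")
    independent = p_a * p_b
    fully_shared = min(p_a, p_b)
    return overlap * fully_shared + (1 - overlap) * independent


# Hypothetical 1% loss probability on each network, varying operator overlap.
for overlap in (0.0, 0.1, 0.5, 1.0):
    print(f"overlap {overlap:.1f} -> {joint_loss_probability(0.01, 0.01, overlap):.6f}")
```

Under these assumptions even a modest overlap dominates the result, because the shared-failure term is far larger than the independent one. The “free lunch” shrinks quickly as the same operators show up on both networks.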

Web 3’s First Commercial Market

Matti tweeted recently about the promise of storage as the use case to bring Web 3 some real commerce. I believe this is likely.

I was having a conversation recently with a team from a layer one where I told them it is their moral imperative to fill their blockspace as stewards of the L1, but even more than this, it is to do this with economic activity. The industry often forgets the second part of its name.

The whole currency part.

Any protocol that launches a token, and would not like it to be down only, is asking for some kind of economic activity to be conducted in that currency. For layer 1s, it’s their native token: processing payments (executing computation) and charging a gas fee for doing so. The more economic activity happening, the more gas is used, and the more demand there is for their token. This is the crypto-economic model. For other protocols, it is likely some kind of middleware SaaS service.

This model is most interesting when it is paired with some kind of commercial good; in the case of classical L1s, that good is computation. The problem is that for something like financial transactions, variable pricing on execution is a horrible UX. The cost of execution should be the least important part of a financial transaction such as a swap.

What becomes difficult is filling this blockspace with economic activity in the face of this bad UX. While scaling solutions are on the way that will help stabilize this (I highly recommend this whitepaper on Interplanetary Consensus; warning: PDF), the flooded market of layer 1s makes it difficult to find enough activity for a given one.

This problem is much more addressable when you pair this computational capacity with some kind of additional commercial good. In the case of DSNs, this is storage. The economic activity of data being stored and the related elements such as financing and securitization of these storage providers is an immediate filler.

But this storage also needs to be a functional solution for traditional businesses to use. Particularly those who deal with regulations around how their data is stored. This most commonly comes in the form of auditing standards, geographical restrictions, and making the UX simple enough to use.

We’ve discussed Banyan before in our Middleware Thesis part 2, but their product is a fantastic step in the right direction on this front. They work with node operators in a DSN to secure SOC certifications for the storage being provided while offering a simple UX for uploading your files.

But this alone is not enough.

The content stored also needs to be easily accessible with efficient retrieval markets. One thing we are very excited about at Zee Prime is the promise of creating a Content Distribution Network (CDN) on top of a DSN. A CDN is a tool to cache content close to the users and deliver improved latency when retrieving the content.
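
As a minimal sketch of the caching idea, the toy below puts a small least-recently-used cache in front of a slow origin. `EdgeCache` and the `fetch_from_origin` callback are hypothetical stand-ins for an edge node and a DSN retrieval call; a real CDN also has to deal with routing, invalidation, and payment, none of which is modelled here.

```python
from collections import OrderedDict


class EdgeCache:
    """Least-recently-used cache sitting between users and a slower origin."""

    def __init__(self, fetch_from_origin, capacity=2):
        self.fetch_from_origin = fetch_from_origin  # slow path, e.g. a DSN retrieval
        self.capacity = capacity
        self.items = OrderedDict()                  # content id -> cached bytes
        self.hits = 0
        self.misses = 0

    def get(self, content_id):
        if content_id in self.items:
            self.items.move_to_end(content_id)      # mark as most recently used
            self.hits += 1
            return self.items[content_id]
        self.misses += 1
        data = self.fetch_from_origin(content_id)
        self.items[content_id] = data
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)          # evict the least recently used
        return data


# Stand-in origin: in practice this would be a retrieval from storage providers.
cache = EdgeCache(lambda content_id: f"bytes of {content_id}".encode(), capacity=2)
for request in ["intro.mp4", "intro.mp4", "ep1.mp4", "intro.mp4"]:
    cache.get(request)
print("hits:", cache.hits, "misses:", cache.misses)
```

Popular content is served from the edge after the first request, so only cache misses pay the latency and retrieval cost of going back to the storage network.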

We believe this is the next critical component in making DSNs widely adopted, as it allows videos to load quickly (think building a decentralized Netflix, YouTube, TikTok, etc.). One proxy for this space is our portfolio company Glitter, which focuses on indexing DSNs. This matters because indexing is a critical piece of infrastructure for improving the efficiency of retrieval markets and facilitating these more exciting use cases.

The potential for these kinds of products excites us as they have demonstrated PMF with high demand in Web 2. Despite this adoption, many face frictions that could benefit from leveraging the permissionless nature of Web 3 solutions.

Consequences of Composability

Interestingly, we think some of the best alpha on DSNs is hiding in plain sight. In two pieces on the Filecoin side, Jnthnvctr shares some great ideas on how these markets will evolve and the products that will come.

One of the most interesting takeaways is the potential for pairing off-chain computation in addition to storage and on-chain computation. This is because of the natural computational needs of providing storage resources in the first place. This natural pairing can add commercial activity to flow through the DSN while opening up new use cases.

The launch of the FEVM makes many of these level-ups possible and will make the storage space much more interesting and competitive. For founders searching for new products to build, there is even a resource of all the products Protocol Labs is requesting people to build with potential grants available.

In Web 2, we learned that data has a kind of gravitational pull: companies that collected or created a lot of it could reap the rewards and were accordingly incentivized to close it off to protect that advantage.

If our dreams of user-controlled data solutions become mainstream, we can ask ourselves how the point where this value accrual happens changes. Users become the primary beneficiaries, receiving cash flows in exchange for their data, and the monetization tools that unlock this potential no doubt benefit too, but where and how this data is stored and accessed also changes dramatically. Naturally, this kind of data can sit on DSNs, which benefit from the usage of this data via robust query markets. This is a shift from exploitation toward flow.

What comes after could be extremely exciting.

When we think about the future of decentralized storage, it is fun to consider how it might interact with operating systems of the future like Urbit. For those unfamiliar, Urbit is a kind of personal server built with open-source software that allows you to participate in a peer-to-peer network: a truly decentralized OS for self-hosting and interacting with the internet in a P2P way.

If the future plays out the way Urbit maximalists might hope, decentralized storage solutions undoubtedly become a critical piece of the individual stack. One can easily imagine hosting all of one’s user-related data encrypted on one of the DSNs and coordinating actions via Urbit OS. Beyond this, we could expect deeper integrations between Urbit and the rest of Web 3, especially with projects such as Uqbar Network, which brings smart contracting to your Nock environment.

These are the consequences of composability: the slow burn continues to build up exponentially until it delivers something really exciting. What feels like thumb twiddling becomes a revolution, an alternative path towards existing in a hyper-connected world. While Urbit might not be the end solution on this front (it has its criticisms), what it does show us is how these pieces can come together to open up a new river of exploration.